At Triton UAS, we compete globally to build autonomous drones capable of complex search-and-rescue missions. As the Computer Vision Lead, I architected the vision stack that allows our drone to identify targets, map terrain, and make decisions without human intervention.

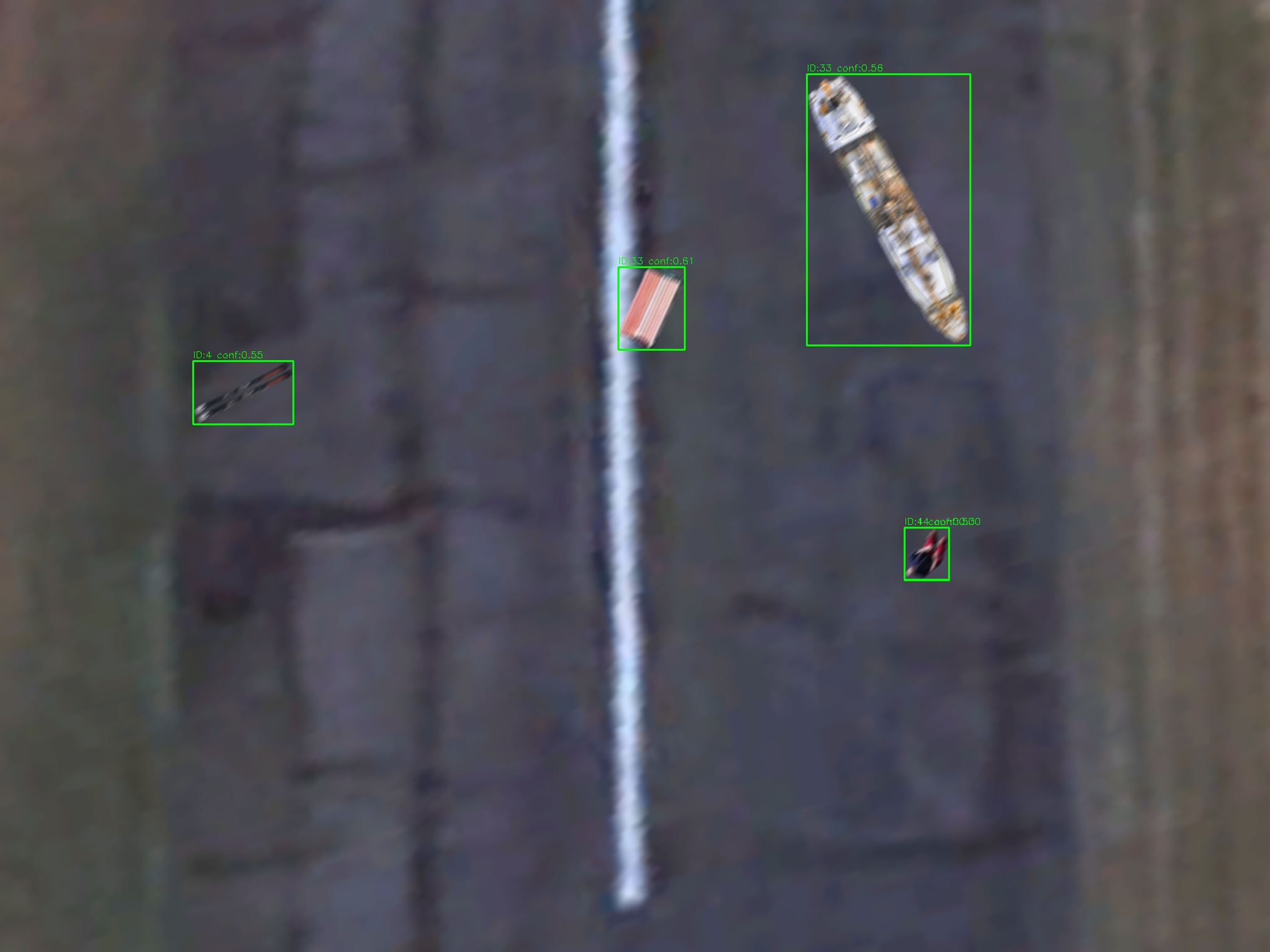

The Production Pipeline: I designed a high-throughput C++ inference pipeline centered on YOLO v11 and ONNX Runtime. It runs on an onboard Jetson Orin Nano and processes high-resolution aerial imagery in real time. A custom asynchronous aggregation layer, built on thread pools and mutex-locked queues, decouples image ingestion from inference so that the flight control loop never stutters, even under heavy compute load.
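To make the decoupling idea concrete, here is a minimal sketch of a mutex-locked queue sitting between an ingestion thread and an inference worker. It is not the code that flies on the drone: the `BoundedQueue` name, the fixed capacity, and the drop-oldest policy are all assumptions chosen for illustration. The point is that the producer never blocks, so a slow inference step cannot stall whatever is feeding it frames.

```cpp
#include <cassert>
#include <condition_variable>
#include <cstddef>
#include <deque>
#include <mutex>
#include <optional>
#include <utility>

// Hypothetical bounded, thread-safe queue (illustrative name and policy).
template <typename T>
class BoundedQueue {
public:
    explicit BoundedQueue(std::size_t capacity) : capacity_(capacity) {
        assert(capacity > 0);
    }

    // Producer side: never blocks. If the queue is full, the oldest item
    // is discarded to make room. Returns true if an older item was dropped.
    // After close(), new items are ignored and false is returned.
    bool push(T item) {
        bool dropped = false;
        {
            std::lock_guard<std::mutex> lock(mutex_);
            if (closed_) return false;
            if (items_.size() == capacity_) {
                items_.pop_front();
                dropped = true;
            }
            items_.push_back(std::move(item));
        }
        not_empty_.notify_one();
        return dropped;
    }

    // Consumer side: blocks until an item is available, or returns
    // std::nullopt once the queue has been closed and drained.
    std::optional<T> pop() {
        std::unique_lock<std::mutex> lock(mutex_);
        not_empty_.wait(lock, [this] { return closed_ || !items_.empty(); });
        if (items_.empty()) return std::nullopt;
        T item = std::move(items_.front());
        items_.pop_front();
        return item;
    }

    // Wakes all waiting consumers so worker threads can shut down cleanly.
    void close() {
        {
            std::lock_guard<std::mutex> lock(mutex_);
            closed_ = true;
        }
        not_empty_.notify_all();
    }

private:
    std::size_t capacity_;
    std::deque<T> items_;
    std::mutex mutex_;
    std::condition_variable not_empty_;
    bool closed_ = false;
};
```

In a setup like this, the camera thread would call `push` for every frame while one or more worker threads loop on `pop` and run inference. Dropping the oldest frame favors fresh imagery over completeness, which is a design choice that may or may not suit a given mission.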

Simulation & Synthetic Data: Real-world flight data is expensive and risky to acquire. To solve this, I built a synthetic data generation engine that creates photorealistic aerial imagery for training. Recently, I extended this system to function as a "mock camera" for SITL (software-in-the-loop) simulations. This lets us pipe synthetic video feeds directly into ArduPilot and validate our entire vision-to-geolocation stack in a virtual environment before we ever spin up a propeller.

Mapping & Geolocation: Beyond detection, the system performs precise geo-registration. I implemented a GSD-based (ground sample distance) localizer that uses flight telemetry to project 2D pixel detections into 3D global coordinates. For terrain mapping, I built a dynamic stitching module that generates large-scale orthomosaics mid-flight, using a multi-pass chunking strategy to stay within the memory limits of the embedded hardware.
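As a rough illustration of GSD-based localization, the sketch below converts a pixel detection into latitude and longitude. It rests on several simplifying assumptions that are mine, not necessarily the real system's: the camera points straight down, the ground is flat, the top of the image is aligned with the aircraft heading, and offsets are small enough for a flat-earth approximation. Ground sample distance is computed as sensor width × altitude / (focal length × image width), in meters per pixel. All struct and function names are hypothetical.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical types for illustration only.
struct CameraModel {
    double sensor_width_mm;
    double focal_length_mm;
    int image_width_px;
    int image_height_px;
};

struct Telemetry {
    double lat_deg;
    double lon_deg;
    double altitude_agl_m;  // height above ground level
    double heading_deg;     // 0 = north, 90 = east
};

struct GeoPoint {
    double lat_deg;
    double lon_deg;
};

// Meters on the ground covered by one pixel (nadir camera assumed).
double groundSampleDistance(const CameraModel& cam, double altitude_agl_m) {
    return (cam.sensor_width_mm * altitude_agl_m) /
           (cam.focal_length_mm * cam.image_width_px);
}

// Projects pixel (px, py) to a ground position. Image x grows to the
// right and y grows downward, so "forward" is toward the top of the image.
GeoPoint localize(const CameraModel& cam, const Telemetry& t,
                  double px, double py) {
    assert(cam.focal_length_mm > 0.0 && cam.image_width_px > 0);
    const double kPi = std::acos(-1.0);
    const double kEarthRadiusM = 6378137.0;  // WGS84 equatorial radius

    const double gsd = groundSampleDistance(cam, t.altitude_agl_m);
    const double right_m = (px - cam.image_width_px / 2.0) * gsd;
    const double forward_m = (cam.image_height_px / 2.0 - py) * gsd;

    // Rotate the body-frame offset into north/east using the heading.
    const double h = t.heading_deg * kPi / 180.0;
    const double north_m = forward_m * std::cos(h) - right_m * std::sin(h);
    const double east_m = forward_m * std::sin(h) + right_m * std::cos(h);

    // Flat-earth conversion from meters to degrees around the aircraft.
    const double lat0 = t.lat_deg * kPi / 180.0;
    GeoPoint out;
    out.lat_deg = t.lat_deg + (north_m / kEarthRadiusM) * 180.0 / kPi;
    out.lon_deg = t.lon_deg +
                  (east_m / (kEarthRadiusM * std::cos(lat0))) * 180.0 / kPi;
    return out;
}
```

A production localizer would likely also account for camera pitch and roll, lens distortion, and terrain elevation, all of which this sketch ignores.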

For a deeper dive into the components and mini-projects behind this work, see the Triton UAS CV wiki documentation I wrote.